First, import the required packages:

```python
import os
import time

import numpy as np
import cv2
import torch
import torch.nn as nn
import torchvision.transforms as transforms
import pandas as pd
from torch.utils.data import DataLoader, Dataset
```

Next, define a function `readfile` that reads the image files:

```python
def readfile(path, label):
    # label is a boolean: whether to also return the y values (class labels)
    image_dir = sorted(os.listdir(path))
    x = np.zeros((len(image_dir), 128, 128, 3), dtype=np.uint8)
    y = np.zeros((len(image_dir)), dtype=np.uint8)
    for i, file in enumerate(image_dir):
        # OpenCV loads images in BGR channel order
        img = cv2.imread(os.path.join(path, file))
        x[i, :, :] = cv2.resize(img, (128, 128))
        if label:
            y[i] = int(file.split("_")[0])  # the class is encoded in the filename prefix
    if label:
        return x, y
    else:
        return x
```

Next, set the path where the data is stored and load it:

```python
# Load the training, validation, and testing sets with readfile
workspace_dir = '/Users/limiaochen/Desktop/ML-李宏毅/数据/hw3/food-11'
print("Reading data")
train_x, train_y = readfile(os.path.join(workspace_dir, "training"), True)
print("Size of training data = {}".format(len(train_x)))
val_x, val_y = readfile(os.path.join(workspace_dir, "validation"), True)
print("Size of validation data = {}".format(len(val_x)))
test_x = readfile(os.path.join(workspace_dir, "testing"), False)
print("Size of Testing data = {}".format(len(test_x)))
```

The output is:

```
Reading data
Size of training data = 9866
Size of validation data = 3430
Size of Testing data = 3347
```

The training set has 9866 images, the validation set has 3430, and the test set has 3347.
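`readfile` takes each label from the part of the filename before the first underscore. One quick way to sanity-check the loaded data is to count how many images each class has. The sketch below assumes filenames of the form `<class>_<index>.jpg`, which is what `int(file.split("_")[0])` implies. The function name `class_counts` and the example filenames are hypothetical and only serve as an illustration.

```python
from collections import Counter


def class_counts(filenames):
    """Count images per class, using the same rule as readfile:
    the label is the integer before the first underscore in the filename."""
    return Counter(int(name.split("_")[0]) for name in filenames)


# Hypothetical filenames, for illustration only
names = ["0_0.jpg", "0_1.jpg", "3_0.jpg", "10_5.jpg", "3_7.jpg"]
print(sorted(class_counts(names).items()))
```

On the real data, you could pass `os.listdir(os.path.join(workspace_dir, "training"))` instead of the toy list.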

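Next comes data augmentation. It enlarges the effective amount of training data by transforming the images, for example by rotating them.

Data augmentation discourages the network from learning irrelevant features, which usually improves how well it generalizes. After small changes such as flips, translations, and rotations, the network treats each variant as a different image.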
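The sketch below shows why an augmented image counts as a "different" input while keeping its label. It uses only NumPy and implements two of the transformations mentioned above: a random horizontal flip, and a small translation with zero padding. It illustrates the idea only. The training code below relies on torchvision's `RandomHorizontalFlip` and `RandomRotation`, and the helper names `random_hflip` and `translate` are hypothetical.

```python
import numpy as np


def random_hflip(img, rng, p=0.5):
    """Flip an H x W x C image left-to-right with probability p."""
    if rng.random() < p:
        return img[:, ::-1, :]
    return img


def translate(img, dx, dy):
    """Shift an H x W x C image by dx pixels right and dy pixels down,
    filling the exposed border with zeros."""
    h, w = img.shape[:2]
    out = np.zeros_like(img)
    # Source and destination slices of the region that stays inside the frame
    src_x = slice(max(0, -dx), min(w, w - dx))
    dst_x = slice(max(0, dx), min(w, w + dx))
    src_y = slice(max(0, -dy), min(h, h - dy))
    dst_y = slice(max(0, dy), min(h, h + dy))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


img = np.arange(4 * 4 * 3, dtype=np.uint8).reshape(4, 4, 3)  # a tiny fake "image"
label = 3  # augmentation changes the pixels, never the label
rng = np.random.default_rng(0)
for variant in [random_hflip(img, rng, p=1.0), translate(img, 1, 0)]:
    print(variant.shape, np.array_equal(variant, img), label)
```

Each variant keeps the same shape and label but has different pixel values.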

```python
# Apply data augmentation during training
train_transform = transforms.Compose([  # chain the following transforms
    transforms.ToPILImage(),
    transforms.RandomHorizontalFlip(),  # randomly flip the image horizontally (p=0.5)
    transforms.RandomRotation(15),  # randomly rotate the image by an angle in [-15, 15] degrees
    transforms.ToTensor(),  # convert to a tensor and scale pixel values to [0, 1]
])
# No data augmentation at test time
test_transform = transforms.Compose([
    transforms.ToPILImage(),
    transforms.ToTensor(),
])
```

In PyTorch, the `Dataset` and `DataLoader` classes from `torch.utils.data` can "wrap" the data, which makes later training and testing more convenient.

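Dataset

`Dataset` is PyTorch's abstract class for representing a dataset. To represent our data with it, subclass it and override at least these two methods:

`__len__`: returns the size of the dataset

`__getitem__`: lets you fetch the i-th sample by index (`dataset[i]`)

You can apply suitable preprocessing before a sample is returned, for example turning raw text into a sequence of numbers.

DataLoader

`DataLoader` is an iterable over a dataset. In its most basic use, you pass it a `Dataset` object and it yields batches whose size is set by the `batch_size` argument.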
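The following pure-Python sketch makes the two roles concrete by imitating the protocol. It defines a class with `__len__` and `__getitem__`, plus a small generator that optionally shuffles the indices and groups samples into batches. The names `SquaresDataset` and `simple_loader` are hypothetical, and this is not PyTorch's implementation: the real `DataLoader` also collates samples into tensors and supports worker processes, custom samplers, and more.

```python
import random


class SquaresDataset:
    """A toy map-style dataset: sample i is the pair (i, i * i)."""

    def __init__(self, n):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, index):
        if not 0 <= index < self.n:
            raise IndexError(index)
        return index, index * index


def simple_loader(dataset, batch_size, shuffle=False, seed=None):
    """Yield lists of samples, batch_size at a time; the last batch may be smaller."""
    indices = list(range(len(dataset)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [dataset[i] for i in indices[start:start + batch_size]]


ds = SquaresDataset(10)
print(len(ds), ds[3])  # 10 (3, 9)
for batch in simple_loader(ds, batch_size=4):
    print(batch)
```

With 10 samples and `batch_size=4`, the loop produces batches of 4, 4, and 2 samples. This mirrors how `DataLoader` behaves with its default `drop_last=False`.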

```python
class ImgDataset(Dataset):  # subclass of Dataset
    def __init__(self, x, y=None, transform=None):
        self.x = x
        # label is required to be a LongTensor
        self.y = y
        if y is not None:
            self.y = torch.LongTensor(y)
        self.transform = transform

    def __len__(self):
        return len(self.x)

    def __getitem__(self, index):
        X = self.x[index]
        if self.transform is not None:
            X = self.transform(X)
        if self.y is not None:
            Y = self.y[index]
            return X, Y
        else:
            return X
```

During training we use mini-batches, so the parameters are updated after every batch rather than once per full pass over the data. Set `batch_size`, here 128, and wrap the data:

```python
batch_size = 128
train_set = ImgDataset(train_x, train_y, train_transform)
val_set = ImgDataset(val_x, val_y, test_transform)
train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
val_loader = DataLoader(val_set, batch_size=batch_size, shuffle=False)
```

The data pipeline is now ready.
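With `batch_size = 128`, one pass over N samples consists of ⌈N / 128⌉ batches. Under `DataLoader`'s default `drop_last=False`, the last batch holds whatever samples are left over. The short calculation below applies this to the dataset sizes printed earlier. The helper name `batch_plan` is hypothetical.

```python
import math


def batch_plan(num_samples, batch_size):
    """Return (number of batches, size of the last batch) for one epoch,
    matching DataLoader's default drop_last=False behaviour."""
    num_batches = math.ceil(num_samples / batch_size)
    last = num_samples - (num_batches - 1) * batch_size
    return num_batches, last


for name, n in [("training", 9866), ("validation", 3430)]:
    print(name, batch_plan(n, 128))  # training (78, 10), validation (27, 102)
```

Each training epoch therefore performs 78 parameter updates, and the last one uses only 10 images.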

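Next, we build the model with PyTorch.

We use four modules, `nn.Conv2d`, `nn.BatchNorm2d`, `nn.ReLU`, and `nn.MaxPool2d`, to build a CNN with five convolutional blocks:

`nn.Conv2d`: convolutional layer

`nn.BatchNorm2d`: batch normalization

`nn.ReLU`: activation layer

`nn.MaxPool2d`: max-pooling layer

After the convolutional blocks, the features pass through three fully connected layers, which output scores for the 11 classes.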
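The shape comments in the model code follow from the standard output-size formula for convolution and pooling with dilation 1: out = ⌊(in + 2·padding − kernel_size) / stride⌋ + 1. The sketch below traces a 128×128 input through the five conv + pool blocks. It confirms that the first fully connected layer needs 512 × 4 × 4 input features. The helper names `out_size` and `trace_cnn` are hypothetical.

```python
def out_size(size, kernel_size, stride, padding):
    """Spatial output size of Conv2d / MaxPool2d (dilation = 1, ceil_mode = False)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace_cnn(size=128, channels=(64, 128, 256, 512, 512)):
    """Apply Conv2d(k=3, s=1, p=1) then MaxPool2d(k=2, s=2, p=0) once per block."""
    shapes = []
    for c in channels:
        size = out_size(size, 3, 1, 1)  # the 3x3 convolution with padding 1 keeps the size
        size = out_size(size, 2, 2, 0)  # 2x2 pooling with stride 2 halves it
        shapes.append((c, size, size))
    return shapes


shapes = trace_cnn()
print(shapes)  # [(64, 64, 64), (128, 32, 32), (256, 16, 16), (512, 8, 8), (512, 4, 4)]
c, h, w = shapes[-1]
print("flattened features:", c * h * w)  # 8192 = 512 * 4 * 4
```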

```python
class Classifier(nn.Module):
    def __init__(self):
        super(Classifier, self).__init__()
        # torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding)
        # torch.nn.MaxPool2d(kernel_size, stride, padding)
        # input shape: [3, 128, 128]
        self.cnn = nn.Sequential(
            nn.Conv2d(3, 64, 3, 1, 1),  # [64, 128, 128]
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),  # [64, 64, 64]

            nn.Conv2d(64, 128, 3, 1, 1),  # [128, 64, 64]
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),  # [128, 32, 32]

            nn.Conv2d(128, 256, 3, 1, 1),  # [256, 32, 32]
            nn.BatchNorm2d(256),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),  # [256, 16, 16]

            nn.Conv2d(256, 512, 3, 1, 1),  # [512, 16, 16]
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),  # [512, 8, 8]

            nn.Conv2d(512, 512, 3, 1, 1),  # [512, 8, 8]
            nn.BatchNorm2d(512),
            nn.ReLU(),
            nn.MaxPool2d(2, 2, 0),  # [512, 4, 4]
        )
        # Three fully connected layers map the flattened features to 11 class scores
        self.fc = nn.Sequential(
            nn.Linear(512*4*4, 1024),
            nn.ReLU(),
            nn.Linear(1024, 512),
            nn.ReLU(),
            nn.Linear(512, 11)
        )

    def forward(self, x):
        out = self.cnn(x)
        out = out.view(out.size()[0], -1)  # flatten to [batch_size, 512*4*4]
        return self.fc(out)
```

With the model defined, we can start training:

```python
# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = Classifier().to(device)
loss = nn.CrossEntropyLoss()  # cross-entropy loss
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)  # use the Adam optimizer
num_epoch = 30  # number of epochs

# Training
for epoch in range(num_epoch):
    epoch_start_time = time.time()
    train_acc = 0.0  # accumulate accuracy and loss for each epoch
    train_loss = 0.0
    val_acc = 0.0
    val_loss = 0.0

    model.train()  # training mode: enables Dropout (if any), BatchNorm uses batch statistics
    for i, data in enumerate(train_loader):
        optimizer.zero_grad()  # reset the gradients of the model parameters to zero
        train_pred = model(data[0].to(device))  # forward pass: compute the predictions
        batch_loss = loss(train_pred, data[1].to(device))  # compute the loss (prediction and label must be on the same device)
        batch_loss.backward()  # back-propagation: compute the gradient of each parameter
        optimizer.step()  # update the parameters using the gradients
        train_acc += np.sum(np.argmax(train_pred.detach().cpu().numpy(), axis=1) == data[1].numpy())
        train_loss += batch_loss.item()

    model.eval()  # evaluation mode: BatchNorm uses its running statistics
    with torch.no_grad():
        for i, data in enumerate(val_loader):
            val_pred = model(data[0].to(device))
            batch_loss = loss(val_pred, data[1].to(device))
            val_acc += np.sum(np.argmax(val_pred.cpu().numpy(), axis=1) == data[1].numpy())
            val_loss += batch_loss.item()

    # Print the results
    print('[%03d/%03d] %2.2f sec(s) Train Acc: %3.6f Loss: %3.6f | Val Acc: %3.6f loss: %3.6f' % \
        (epoch + 1, num_epoch, time.time() - epoch_start_time, \
         train_acc / len(train_set), train_loss / len(train_set), val_acc / len(val_set), val_loss / len(val_set)))
```

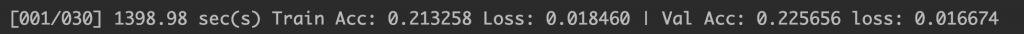

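My computer has no NVIDIA GPU, so I couldn't use CUDA acceleration and had to run on the CPU. That is extremely slow: a single epoch took about 1400 seconds. I then ran the code on a desktop with a GPU, and the results are below: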

```
[001/030] 27.24 sec(s) Train Acc: 0.237888 Loss: 0.017894 | Val Acc: 0.269096 loss: 0.016925
[002/030] 21.78 sec(s) Train Acc: 0.346949 Loss: 0.014638 | Val Acc: 0.387172 loss: 0.014022
[003/030] 21.92 sec(s) Train Acc: 0.398439 Loss: 0.013529 | Val Acc: 0.373761 loss: 0.013851
[004/030] 21.91 sec(s) Train Acc: 0.437563 Loss: 0.012768 | Val Acc: 0.324198 loss: 0.015293
[005/030] 22.03 sec(s) Train Acc: 0.462700 Loss: 0.012086 | Val Acc: 0.451603 loss: 0.012170
[006/030] 21.90 sec(s) Train Acc: 0.501318 Loss: 0.011245 | Val Acc: 0.393878 loss: 0.014743
[007/030] 21.87 sec(s) Train Acc: 0.524427 Loss: 0.010855 | Val Acc: 0.421574 loss: 0.013640
[008/030] 21.88 sec(s) Train Acc: 0.558889 Loss: 0.010173 | Val Acc: 0.334111 loss: 0.017887
[009/030] 21.88 sec(s) Train Acc: 0.571052 Loss: 0.009840 | Val Acc: 0.484257 loss: 0.012081
[010/030] 21.88 sec(s) Train Acc: 0.601155 Loss: 0.009098 | Val Acc: 0.479009 loss: 0.012725
[011/030] 21.91 sec(s) Train Acc: 0.607642 Loss: 0.008935 | Val Acc: 0.516910 loss: 0.010935
[012/030] 21.90 sec(s) Train Acc: 0.632982 Loss: 0.008324 | Val Acc: 0.513411 loss: 0.011666
[013/030] 21.91 sec(s) Train Acc: 0.652139 Loss: 0.007836 | Val Acc: 0.581633 loss: 0.009890
[014/030] 21.92 sec(s) Train Acc: 0.674032 Loss: 0.007296 | Val Acc: 0.572886 loss: 0.010333
[015/030] 21.90 sec(s) Train Acc: 0.688830 Loss: 0.007036 | Val Acc: 0.487755 loss: 0.013652
[016/030] 21.90 sec(s) Train Acc: 0.700892 Loss: 0.006790 | Val Acc: 0.597376 loss: 0.010099
[017/030] 21.89 sec(s) Train Acc: 0.719542 Loss: 0.006308 | Val Acc: 0.567930 loss: 0.010902
[018/030] 21.95 sec(s) Train Acc: 0.732009 Loss: 0.006244 | Val Acc: 0.560641 loss: 0.011560
[019/030] 21.87 sec(s) Train Acc: 0.747213 Loss: 0.005798 | Val Acc: 0.468805 loss: 0.015137
[020/030] 21.92 sec(s) Train Acc: 0.738192 Loss: 0.005893 | Val Acc: 0.618950 loss: 0.009629
[021/030] 21.84 sec(s) Train Acc: 0.751571 Loss: 0.005559 | Val Acc: 0.634985 loss: 0.009336
[022/030] 21.84 sec(s) Train Acc: 0.767484 Loss: 0.005285 | Val Acc: 0.603207 loss: 0.010479
[023/030] 21.81 sec(s) Train Acc: 0.782789 Loss: 0.004811 | Val Acc: 0.658601 loss: 0.009200
[024/030] 21.84 sec(s) Train Acc: 0.810055 Loss: 0.004360 | Val Acc: 0.420408 loss: 0.019338
[025/030] 21.85 sec(s) Train Acc: 0.801642 Loss: 0.004485 | Val Acc: 0.658017 loss: 0.009225
[026/030] 21.82 sec(s) Train Acc: 0.833671 Loss: 0.003745 | Val Acc: 0.643440 loss: 0.010182
[027/030] 21.84 sec(s) Train Acc: 0.832860 Loss: 0.003715 | Val Acc: 0.634694 loss: 0.010398
[028/030] 21.83 sec(s) Train Acc: 0.842489 Loss: 0.003504 | Val Acc: 0.638192 loss: 0.011266
[029/030] 21.82 sec(s) Train Acc: 0.839651 Loss: 0.003549 | Val Acc: 0.630029 loss: 0.010706
[030/030] 21.82 sec(s) Train Acc: 0.855159 Loss: 0.003248 | Val Acc: 0.667638 loss: 0.009832
```
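A note on reading the Loss columns in the log above. `nn.CrossEntropyLoss` averages over the batch by default (`reduction='mean'`), so each `batch_loss.item()` is already a per-batch mean. Dividing the sum of those means by the number of samples therefore gives roughly the per-sample loss divided by the batch size. For example, the first epoch's 0.017894 corresponds to a per-sample loss of roughly 2.3, which is close to the ln 11 ≈ 2.40 of uniform guessing over 11 classes. The sketch below uses made-up batch losses to compare the logged ratio with a properly sample-weighted mean. The helper names are hypothetical. In the training loop itself, one way to get the per-sample average is to accumulate `batch_loss.item() * data[1].size(0)` before dividing by `len(train_set)`.

```python
def logged_loss(batch_means, num_samples):
    """What the training loop prints: sum of per-batch mean losses / dataset size."""
    return sum(batch_means) / num_samples


def per_sample_loss(batch_means, batch_sizes):
    """True average loss per sample: weight each batch mean by its batch size."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)


# Made-up numbers: three batches holding 128, 128 and 10 samples
means = [2.4, 2.3, 2.2]
sizes = [128, 128, 10]
print(logged_loss(means, sum(sizes)))   # about 0.026, far below a realistic cross-entropy
print(per_sample_loss(means, sizes))    # about 2.34
```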